In the city of Mandurah on the west coast of Australia, with its beaches, estuaries, wetlands, and canals, you’re never far from the sights and sounds of water. Paul Bourke recently used Vuo to convey the familiar sights and sounds in an entirely new way: a fulldome installation called the Hydrodome that “focuses on our community’s connection to our waterways from Mandurah’s ancient Bindjareb heritage to 21st century recreation.”

Commissioned by the City of Mandurah for the Stretch Arts Festival 2018, the Hydrodome has an unusual arrangement. “Unlike a normal dome the audience is on the outside of the dome and the interior contains the projection equipment,” Paul explained. “The dome is 4m in diameter, the best viewing is from further than 3m away. As such the audience can be thousands.”

The Hydrodome debuted at Kaya – Party on the Square on the evening of October 12. Paul and his collaborators are continuing to develop it, incorporating stories and recordings from community members, for display at the Stretch Arts Festival in 2018.

An integral part of the installation is Vuo’s Warp Image with Projection Mesh node. In fact, it was Paul who developed the image warping technique and mesh file format used by this node.

I interviewed Paul by email to learn about the making of the Hydrodome.

What sound and video were played on the dome?

The video works were created by David Carson, a Fremantle-based artist. The audio was created by Justin Wiggan, who resides in the UK. The video was recorded using a few different techniques. Some segments are literal, such as that of local dancer Bernadette Lewis; these were filmed with a Panasonic GH5 and a fisheye lens. Others stem from the artists’ ongoing exploration of slit-scan techniques.

What were the challenges and rewards of capturing and presenting the video in 360 degrees as opposed to the traditional flat screen?

Given that both David Carson and I have been active in the fulldome world for some time, there were not any particularly new challenges for us. Most people struggle with conceptual issues such as where things will appear on the surface and so where to point the camera, typically straight up. The next challenge is usually the equipment. I already have a number of fisheye lenses, and fortunately, for another project, I have a couple of the new Panasonic GH5 cameras, which are capable of over 4K resolution video. With one of the fisheye lenses this resulted in a 2K high fisheye image, a good match for the final resolution of the dome. The domes were acquired by the Mandurah City Council and they already owned the data projectors.

The main attraction for the audience is the novelty. While some may have been exposed to illuminated globes, typically arrays of LED lights, having a recognisable and seamless image around the dome is quite unusual.

What did your setup look like?

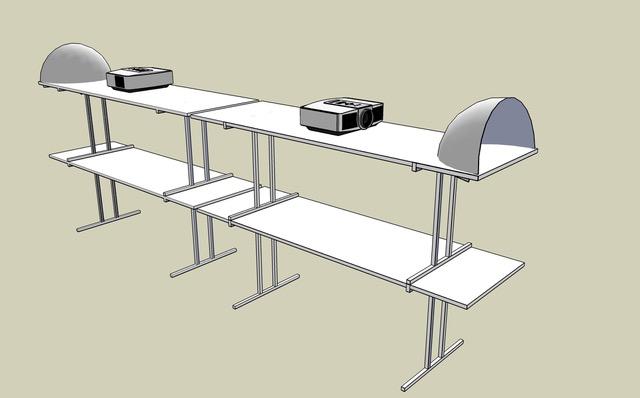

The Hydrodome consists of a semi-transparent hemispherical surface 4m in diameter raised about 1.5m above the ground… The audience can move around the outside and the surface in this installation consists of a single coherent image.

Inside the dome are two bright projectors, two spherical mirrors, a laptop and a semi-random arrangement of tables, stacking blocks, cables, etc.

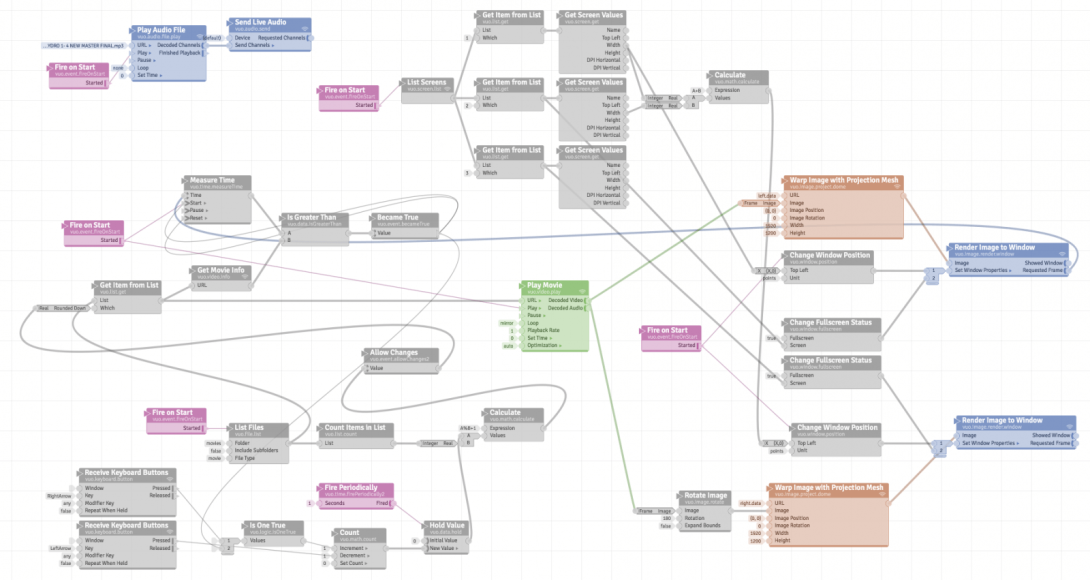

The Vuo playback is quite simple. While there were variations, it largely involves choosing a video clip, dicing it in half, warping each half (calibration performed with another application), and sending the results to the two projectors (each a display on the laptop).
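The "dicing in half" step can be illustrated outside Vuo with a minimal Python sketch. This is not the composition used for the Hydrodome; the function name `split_frame`, the left/right split direction, and the `overlap` parameter are assumptions made for illustration. It treats a frame as a list of pixel rows and lets each half keep a few extra columns past the midline so the two projected images have a shared region to blend.

```python
def split_frame(frame, overlap=0):
    """Split a frame (a list of pixel rows) into left and right halves.

    `overlap` (hypothetical parameter) is the number of extra columns each
    half keeps past the midline, giving the projectors a shared blend zone.
    """
    width = len(frame[0])
    mid = width // 2
    if not 0 <= overlap <= mid:
        raise ValueError("overlap must be between 0 and half the frame width")
    left = [row[:mid + overlap] for row in frame]
    right = [row[mid - overlap:] for row in frame]
    return left, right


# A tiny 2x8 "frame" whose pixel values are just their column indices.
frame = [list(range(8)) for _ in range(2)]
left, right = split_frame(frame, overlap=1)
print(left[0])   # [0, 1, 2, 3, 4]
print(right[0])  # [3, 4, 5, 6, 7]
```

In the real pipeline each half would then be warped by its own calibrated mesh before being sent to its display.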

Why did you use two video feeds and two projectors instead of one?

In dome environments, due to the large surface area, it is usual to not have bright enough projectors. One can buy brighter projectors but like most technology on the edge, to get double of some quantity (light in this case) one often needs ten times the budget. And with increased projector brightness comes larger projector boxes, cooling and noise issues.

The traditional solution then is to simply use more projectors, each one doing a smaller part of the dome and the images blended together. The limiting factor here is cost but also system complexity as the number of projectors increases.

The spherical mirror projection approach is my invention from back in 2002, so the natural and cost effective solution seemed to be twin projectors each reflected off a spherical mirror. Each projector is responsible for half the dome and the result is blended across a mid line of the dome.

Did you use the brightness attribute in the Warp Image with Projection Mesh mesh file to blend between the two projectors?

Absolutely… Note that the use of the brightness attributes is not just to manage blending, it also allows one to adjust the relative brightness of the image in parts, for example those that might have a closer projector to screen distance or where optics may be focusing light.
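To make the blending idea concrete, here is a minimal sketch of a linear cross-fade across the mid line. It is an illustration only, not the contents of Paul's mesh files: the function `blend_weights`, the normalised position `u`, and the `blend_width` value are all assumptions. The point it shows is that the two projectors' brightness multipliers sum to one everywhere, so the overlap is no brighter than the rest of the dome.

```python
def blend_weights(u, midline=0.5, blend_width=0.1):
    """Return (weight_a, weight_b) for a point at horizontal position u.

    u runs from 0.0 (projector A's side) to 1.0 (projector B's side).
    Inside the blend zone the weights ramp linearly and always sum to 1.
    All names and default values here are hypothetical.
    """
    start = midline - blend_width / 2
    end = midline + blend_width / 2
    if u <= start:
        return 1.0, 0.0
    if u >= end:
        return 0.0, 1.0
    t = (u - start) / (end - start)
    return 1.0 - t, t


for u in (0.40, 0.48, 0.52, 0.60):
    a, b = blend_weights(u)
    print(f"u={u:.2f}  A={a:.2f}  B={b:.2f}")
```

A real setup would likely also need to account for the projectors' non-linear (gamma) response, and, as Paul notes, the same per-point brightness values can be used to even out regions that would otherwise appear brighter.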

How bright were the projectors?

Each projector is 5500 ANSI lumens, which may seem bright for consumer-grade projectors, but it would still only work after sunset and without direct lights on the dome.
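A rough back-of-the-envelope calculation suggests why darkness is needed. The sketch below assumes each projector's full output is spread evenly over half of a 4m hemisphere, and it ignores losses from the mirrors, light that misses the surface, and the semi-transparent screen, so the real figure would be lower. The function name `average_illuminance` is hypothetical.

```python
import math


def average_illuminance(lumens, dome_diameter_m, projectors=2):
    """Estimate average illuminance (lux) on each projector's share of a dome.

    Assumes an ideal, lossless, even spread of light over a hemisphere
    divided equally between the projectors.
    """
    radius = dome_diameter_m / 2
    hemisphere_area = 2 * math.pi * radius ** 2   # m^2
    area_per_projector = hemisphere_area / projectors
    return lumens / area_per_projector


lux = average_illuminance(5500, 4.0)
print(round(lux))  # 438
```

Even this optimistic estimate of a few hundred lux is far below typical outdoor daylight levels, which is consistent with the dome only being usable after sunset.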

What make/model was the dome you projected onto?

Finding a dome with the characteristics we wanted was not easy. This is not a normal application, where the dome is to present fulldome immersive content to an interior audience. In the end the dome was acquired from a Chinese manufacturer of an extensive range of all sorts of weird and wonderful inflatable structures, namely Guangzhou Bestfun Inflatable Co.

Why did you choose Vuo for this project?

Vuo was chosen probably for the same reason other people choose it: if it provides the tools required, then it is an extremely efficient way to put audio/visual experiences together. It would have been a daunting task to otherwise drive two projectors from a variety of video content while accounting for warping/blending and the associated alignment. Note that the alignment was performed in an external tool of my own, which exports the mesh for warping/blending in my original file format, the one that [Kineme] PBMesh and the Vuo node were designed for.

Further, on the night [of Kaya] we were able to make changes live. For example, there was a mode in the playback where random clips were chosen from a directory of movies. They played from a random starting point and for a random duration. This results in a sequence where people don’t notice an otherwise repeating loop. There was a dance performance by the same performer as in some of the fisheye video, so during that performance we were able to ensure the correct video was playing without interrupting the flow of the video. And lastly, on the night we noticed that the best sequences were those with high colour saturation. A few minutes later, adding a node to the pipeline allowed us to dynamically adjust the colour saturation (and other parameters) on the fly. None of this would have been possible with a fixed composited movie that simply looped.
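The random playback mode can be sketched in a few lines of Python. This is an illustration of the idea rather than the Vuo composition itself: the function `pick_segment`, the clip names, the clip lengths, and the duration bounds are all hypothetical. Each call picks a clip, a random start point, and a random duration that fits within the clip.

```python
import random


def pick_segment(clip_lengths, rng, min_duration=5.0, max_duration=30.0):
    """Pick a random clip, start time, and play duration (in seconds).

    clip_lengths maps a clip name to its total length in seconds.
    The duration is capped so the segment never runs past the clip's end.
    """
    name = rng.choice(sorted(clip_lengths))
    length = clip_lengths[name]
    duration = min(rng.uniform(min_duration, max_duration), length)
    start = rng.uniform(0.0, length - duration)
    return name, start, duration


# Hypothetical clip names and lengths, standing in for a directory of movies.
clips = {"dancer.mov": 120.0, "slitscan_a.mov": 45.0, "estuary.mov": 12.0}
rng = random.Random()
for _ in range(3):
    name, start, duration = pick_segment(clips, rng)
    print(f"{name}: start at {start:.1f}s, play for {duration:.1f}s")
```

Because both the start point and the duration vary, the same clip rarely looks the same twice, which is what hides the repetition from the audience.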

A small point perhaps, but one of the things Vuo seems to do very well is the management of screens; certainly this is something that Quartz Composer handled poorly. This composition automatically brought two fullscreen windows up on two external displays attached to a standard laptop, while retaining the laptop display for editing, changing the composition, and tweaking values, all done live. I could make (some) changes to the composition without the audience necessarily knowing anything unusual was occurring.

Do you have plans to use Vuo in future projects?

Like many people, I used Quartz Composer extensively for a wide range of projects. Some were what one might imagine is its intended use, namely prototyping of graphical pipelines. Others were what it became known for, such as interactive performance and general graphical processing. And still others might surprise some, for example as a control system to manage the presentation of stereoscopic sports-related stimuli (videos) to athletes to measure performance, in a sports science research context.

I absolutely intend to use Vuo in the future; indeed, at the time of writing I am using the GLSL shader node extensively to process high resolution video from extremely wide angle fisheye lenses. The trick with something like Vuo is knowing it sufficiently, and keeping up with developments, so that one can know when it is the right tool for the task at hand.
